One of the best sessions at the SRLN 2018 conference in San Francisco in February was on AI, Ethics, and Decision-making. The speakers were Angie Tripp, Jonathan Pyle, and Abhijeet Chavan. They raised difficult, necessary questions about how courts are currently purchasing and deploying automated decision-making tools.

The most prominent of these are in pretrial services (deciding whether criminal defendants should be released, or how bail should be set), but they are also being introduced in online dispute resolution systems, in compensation systems, and in other tools that automate decisions about cases.

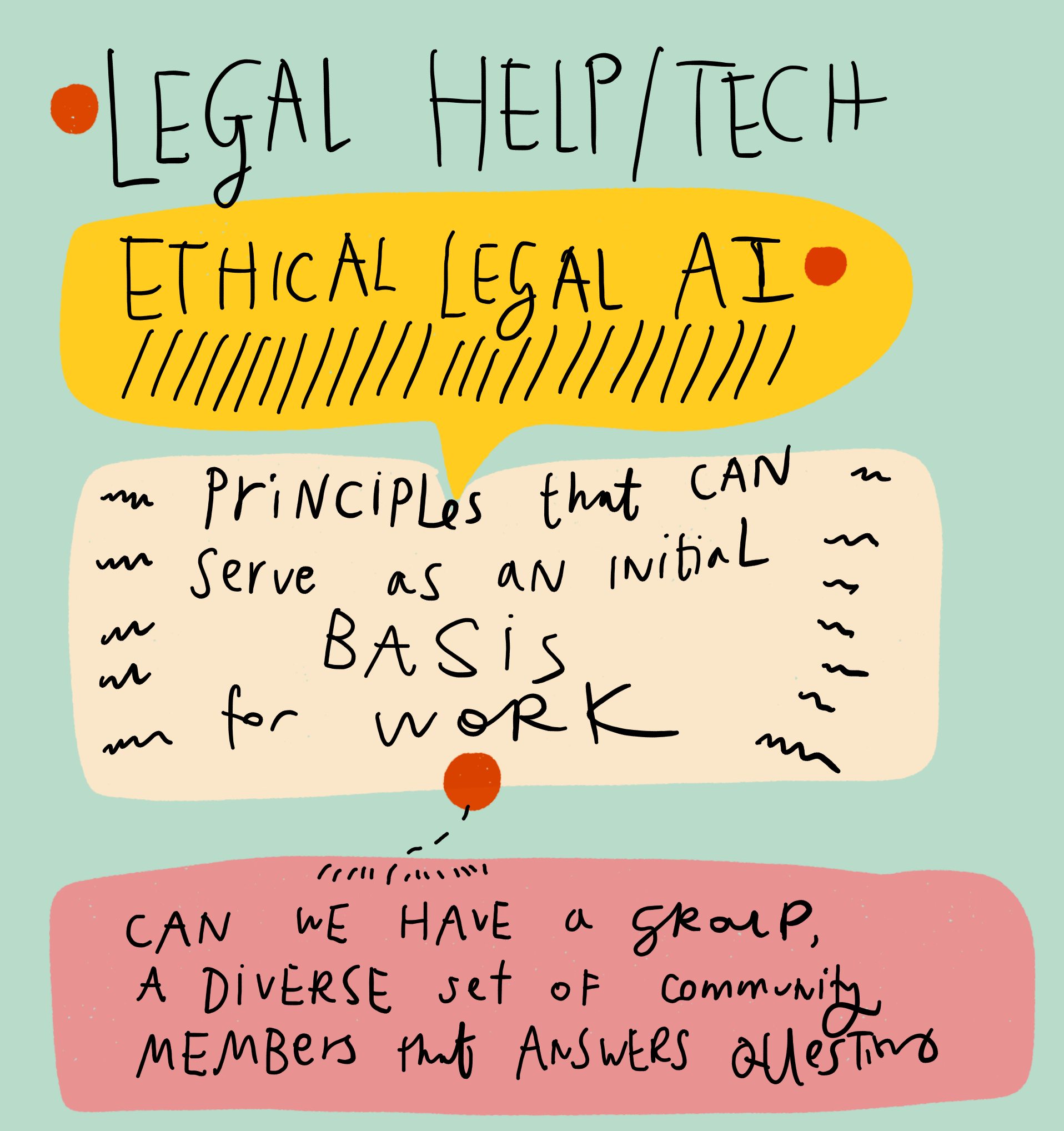

The big takeaway: we need more work to define what values should be baked into these tools, and we need more ways to evaluate what impact these tools are having on people, communities, and justice.

1 Comment

This is a very important and also interesting discussion, but both human and AI processes must be examined. We have plenty of process-related laws and rules that govern judicial decision making, so we will need to use AI to examine how successful actual human judges are now and what weights they apply. We can then see what should be used, discarded, or applied in machine-based systems.

Simply pointing fingers at, adopting, or adapting the current approaches would be a lost opportunity to examine the situation. Then, of course, we will want to do a lot of parallel testing of both approaches.